Different types of coffee beans have distinct flavors that can be enhanced or diminished during the roasting process. By recognizing the type of bean being used, a coffee machine can adjust its brewing parameters to bring out the best flavor in each type of bean.

Approach

This use case relies on a coffee bean image dataset to recognize the roasting level. The proposed model is trained on a public dataset and reaches about 97% accuracy after quantization while running on an STM32.

This model was trained with the Python scripts provided in the STM32 model zoo (read our tutorial on training to learn more).

Here, after setting up our STM32 Model Zoo project, we downloaded the dataset and placed it in the appropriate structure.

Then we updated the YAML file that configures the training with the following settings:

general:
  project_name: coffee_bean

dataset:
  name: coffee
  class_names: [Green, Light, Medium, Dark]
  training_path: datasets/train
  validation_path:
  test_path: datasets/test

Feel free to adjust those settings according to your use-case.
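Before launching a training run, it can help to check that the dataset folders match the class names in the settings above. The following is a minimal sketch, not part of the STM32 model zoo: it assumes one subfolder per class under each split directory (for example datasets/train/Green), and the helper name count_images and the list of image extensions are hypothetical.

```python
from pathlib import Path

# Class names taken from the configuration above.
CLASS_NAMES = ["Green", "Light", "Medium", "Dark"]
# Assumption: images use one of these extensions.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def count_images(split_dir, class_names=CLASS_NAMES):
    """Return {class_name: image_count} for one split directory.

    Assumes one subfolder per class; a missing folder counts as 0.
    """
    split_dir = Path(split_dir)
    counts = {}
    for name in class_names:
        class_dir = split_dir / name
        if class_dir.is_dir():
            counts[name] = sum(
                1
                for p in class_dir.iterdir()
                if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
            )
        else:
            counts[name] = 0
    return counts


if __name__ == "__main__":
    # Paths taken from training_path and test_path in the configuration.
    for split in ("datasets/train", "datasets/test"):
        print(split, count_images(split))
```

A class that reports 0 images usually points to a folder name that does not match the entry in class_names, including letter case.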

Finally, we simply ran the “train.py” script.

You will find everything you need in the STM32 model zoo to train and retrain the model with your own data. The model can also be deployed on an STM32H747 discovery kit with the Python scripts provided in the repository (read our tutorial on the deployment to learn more).

You can find details of all these steps in our Getting started video.

Sensor

Vision: Camera module bundle (reference: B-CAMS-OMV)

Data

Dataset: Coffee Bean Dataset Resized (224 X 224) (License CC BY-SA 4.0)

Data format:

There are four roasting levels: green (unroasted), lightly roasted, medium roasted, and dark roasted beans. The camera is positioned with its plane parallel to the object’s path when the photographs are captured. The dataset contains 4800 photos in total, 1200 per roasting level, resized to a resolution of 224×224.

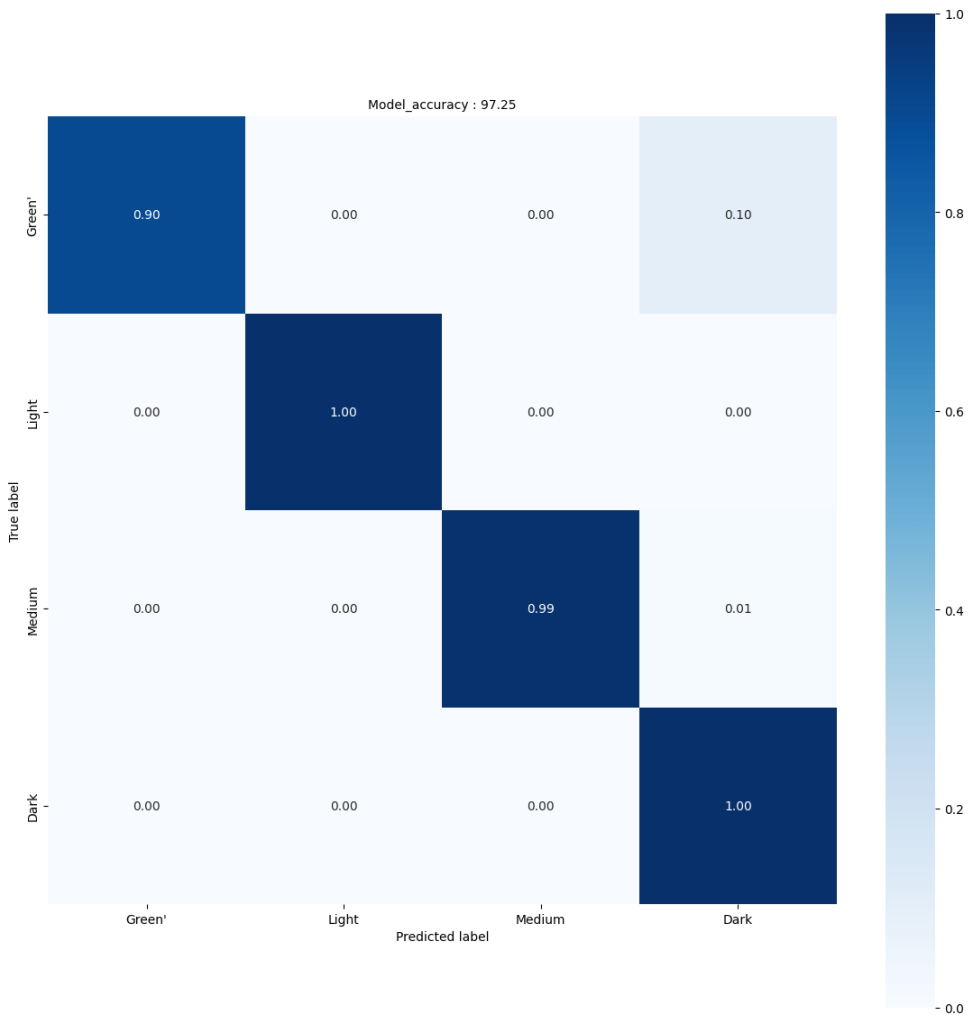

Results

Model: MobileNetV2 alpha 0.35

Input size: 128x128x3

Memory footprint of the quantized model:

Total RAM: 260 KB

- RAM activations: 224 KB

- RAM runtime: 36 KB

Total Flash: 528 KB

- Flash weights: 406 KB

- Estimated flash code: 122 KB
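The totals above are the sums of their parts, and the same arithmetic can be used to check whether the model fits a given target. The sketch below uses the reported figures; the budget values of 512 KB of RAM and 1024 KB of flash are hypothetical placeholders, not the specification of any particular STM32, and should be replaced with the memory actually available to your application.

```python
# Figures reported above for the quantized model, in KB.
RAM_KB = {"activations": 224, "runtime": 36}
FLASH_KB = {"weights": 406, "code": 122}


def fits(parts_kb, budget_kb):
    """Return (total_kb, fits_in_budget) for a set of memory regions."""
    total = sum(parts_kb.values())
    return total, total <= budget_kb


# Hypothetical budgets: replace with the memory available on your device.
ram_total, ram_ok = fits(RAM_KB, budget_kb=512)
flash_total, flash_ok = fits(FLASH_KB, budget_kb=1024)

print(f"RAM: {ram_total} KB (fits: {ram_ok})")
print(f"Flash: {flash_total} KB (fits: {flash_ok})")
```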

Accuracy:

Float model: 97.75%

Quantized model: 97.25%

Performance on STM32H747 (High-perf) @ 400 MHz

Inference time: 107 ms

Frame rate: 9.3 fps
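The frame rate follows from the inference time if inference is assumed to dominate each frame, that is, if camera capture and preprocessing overhead are ignored. A minimal sketch of that conversion, with a hypothetical helper name:

```python
def frames_per_second(inference_ms):
    """Convert a per-frame inference time in milliseconds to frames per second."""
    return 1000.0 / inference_ms


fps = frames_per_second(107)
print(f"{fps:.1f} fps")  # prints "9.3 fps"
```

In a full application the achievable frame rate may be lower, since capture, resizing to the 128x128x3 input, and display also take time.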